DuckDNS latency is high enough (over 2000 ms) that Google Assistant can’t connect. I moved to FreeDDNS and my Home Assistant issues went away.

The point is to show it’s uncapped, since SDR only goes up to 200 nits. It’s not tonemapped in the image.

But, please, continue to argue in bad faith and complete ignorance.

This is a trash take.

I just wrote the ability to take a DX9 game, stealthily convert it to DX9Ex, remap all the incompatible commands so it works, proxy the swapchain texture, set up a shared handle for that proxy texture, create a DX11 swapchain, read that proxy into DX11, and output it in true, native HDR.

All with the assistance of Copilot chat to help make sense of the documentation, and Copilot generation and autocomplete to help set up the code.

All in one day.

Only reason I haven’t modded HDR for this game is because it’s DX9 and a pain to mod. (I already did GTAV - Enhanced and GTA Trilogy Remastered since it’s UE). If they make a new port for PC, I’ll be able to complete the set.

Definitely not. NoJS is not better for accessibility. It’s worse.

You need to set the ARIA states with JS. Believe me, I’ve written an entire component library with this in mind. I thought that NoJS would be better, having an HTML and CSS core and adding JS on afterwards. Then for my second rewrite, I made it JS first and it’s all around better for accessibility. Without JS you’d be leaning on a slew of hacks that just make accessibility suffer. It’s neat to make those NoJS components, but you have to hijack checkboxes or radio buttons in ways they were never intended to work.

The needs of those with disabilities far outweigh the needs of those who want a no script environment.

While with WAI ARIA you can just quickly assert that the page is compliant with a checker before pushing it to live.

Also no. You cannot check accessibility with HTML tags alone. Full stop. You need to check the ARIA attributes manually. You need to ensure states are updated. You need to add custom JS to handle key events to ensure your components work as suggested by the ARIA Practices page. Relying on native components is not enough. They get you partway there, but you’ll also run into incomplete native components that don’t work as expected (eg: Safari and touch events don’t work the same as Chrome and Firefox).
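To give an idea of what that JS ends up doing, here’s a minimal sketch of the keyboard and state logic for a tab list, loosely following the ARIA Practices tabs pattern (arrow keys wrap, Home/End jump to the ends, roving tabindex). The function names `nextTabIndex` and `tabAttributes` are made up for this example and it’s kept DOM-free on purpose. In a real component you’d call these from a `keydown` handler and apply the result with `setAttribute`.

```typescript
// Hypothetical helper: which tab should receive focus after a key press.
function nextTabIndex(current: number, count: number, key: string): number {
  switch (key) {
    case "ArrowRight":
      return (current + 1) % count; // wrap from last to first
    case "ArrowLeft":
      return (current - 1 + count) % count; // wrap from first to last
    case "Home":
      return 0;
    case "End":
      return count - 1;
    default:
      return current; // unrelated keys leave the selection alone
  }
}

// Hypothetical helper: the attributes every tab should carry for a given
// selection. Only the selected tab stays in the tab order (roving tabindex).
function tabAttributes(selected: number, count: number): Array<Record<string, string>> {
  return Array.from({ length: count }, (_, i) => ({
    role: "tab",
    "aria-selected": String(i === selected),
    tabindex: i === selected ? "0" : "-1",
  }));
}
```

None of this state can be expressed in static HTML, which is the point: the attributes are only correct if something updates them on every interaction.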

The sad thing is that accessibility testing is still rather poor. Chrome has the best way to automate testing against the accessibility tree, but it’s still hit or miss at times. It’s worse with Firefox and Safari. You need to doubly confirm with manual testing to ensure the ARIA states are reported correctly. Even with attributes set correctly there’s no guarantee it’ll be handled properly by browsers.

I have a list of bugs that browsers still haven’t fixed. I’ve at least written workarounds for them, and those workarounds need JS to work as expected and provide proper accessibility.

Good news is that we were able to stop the Playwright devs from adopting this poor approach of relying on HTML only for ARIA testing, and it can now take accessibility tree snapshots based on realtime JS values.

Rip iPhone.

Maybe a chronograph or piechart and calling it Snapshot?

Loading would be rewinding, going back in time, so with a counterclockwise arrow?

scrape.maxDepth = 5

Seriously. They probably sell real dry pizza. Pineapple without enough tomato sauce on “pizza” is trash.

Tunic

Sea of Stars

I have just dumped code into a Chrome console and saved a cert while in a pinch. It’s not best practices of course, but when you need something fast for one-time use, it’s nice to have something immediately available.

You could make your own webpage that works in the browser (no backend) and make a cert. I haven’t published anything publicly because you really shouldn’t dump private keys in unknown websites, but nothing is stopping you from making your own.

That’s what NodeJS and Deno are.

The point of the browser support is that it runs on modern Web technologies and doesn’t need external binaries (eg: OpenSSL). It can literally run on any JS runtime, even a browser.
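As a small illustration of “no external binaries”, here’s a sketch of base64url encoding, which ACME needs for its JWS payloads, written as plain code with nothing to install. This is not taken from any particular library; `base64url` and `ALPHABET` are names I picked for the example.

```typescript
// URL-safe base64 alphabet: "-" and "_" replace "+" and "/".
const ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-_";

// Hypothetical helper: encode bytes as unpadded base64url.
function base64url(bytes: Uint8Array): string {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const b0 = bytes[i] ?? 0;
    const b1 = bytes[i + 1] ?? 0;
    const b2 = bytes[i + 2] ?? 0;
    // Pack three bytes into 24 bits, then read them back as 6-bit groups.
    const chunk = (b0 << 16) | (b1 << 8) | b2;
    out += ALPHABET.charAt((chunk >> 18) & 63);
    out += ALPHABET.charAt((chunk >> 12) & 63);
    // Only emit the trailing groups when the input bytes actually exist,
    // which is what leaves the output unpadded.
    if (i + 1 < bytes.length) out += ALPHABET.charAt((chunk >> 6) & 63);
    if (i + 2 < bytes.length) out += ALPHABET.charAt(chunk & 63);
  }
  return out;
}
```

The same file runs unchanged in a browser console, Deno, or NodeJS, since it only touches `Uint8Array` and strings.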

Just going to mention my zero-dependency ACME (Let’s Encrypt) library: https://github.com/clshortfuse/acmejs

It runs on Chrome, Safari, Firefox, Deno, and NodeJS.

I use it to spin up my wildcard and HTTP certificates. I’ve personally automated it by having the certificate upload to S3 buckets and AWS Certificates. I wrote a helper for Name.com for DNS validation. For HTTP validation, I use HTTP PUT.

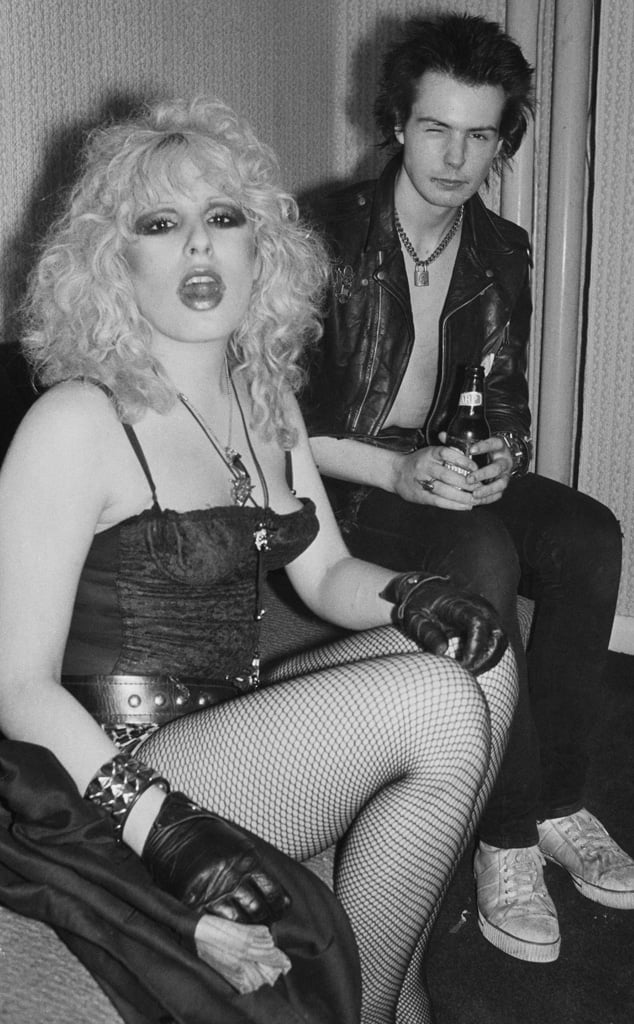

I mean, you can find 70s punk images just like this. I’m sure some are already great-grandparents. Nancy and Sid are from 1977:

Don’t use JSON for the response unless you include the Content-Type response header to specify it’s application/json. You’re better off with regular plaintext unless the request’s Accept header asked for JSON and you respond with the right header.

That also means you can send a response based on what the request asked for.

403 Forbidden (not Unauthorized) is usually enough. Most of those errors are not meant for consumption by an application because it’s rare for 4xx codes to have a contract. They tend to go to a log and get read by humans later, so I’d lean on text as the default.
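Roughly what I mean, as a framework-agnostic sketch. `wantsJson`, `buildError`, and the `ErrorResponse` shape are all made up for this example, and the `{ error: message }` body is just one possible layout. Note that `*/*` deliberately does not count as asking for JSON, so plaintext stays the default.

```typescript
// Hypothetical response shape, independent of any HTTP framework.
interface ErrorResponse {
  status: number;
  headers: Record<string, string>;
  body: string;
}

// True only when the Accept header explicitly lists application/json
// with a non-zero quality value.
function wantsJson(accept?: string): boolean {
  if (!accept) return false;
  return accept.split(",").some((part) => {
    const [mediaType, ...params] = part.split(";").map((s) => s.trim().toLowerCase());
    const q = params.find((p) => p.startsWith("q="));
    const quality = q ? Number.parseFloat(q.slice(2)) : 1;
    return mediaType === "application/json" && quality > 0;
  });
}

// Build an error response whose body format matches what was asked for,
// always labelled with the matching Content-Type header.
function buildError(status: number, message: string, accept?: string): ErrorResponse {
  if (wantsJson(accept)) {
    return {
      status,
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ error: message }),
    };
  }
  return {
    status,
    headers: { "Content-Type": "text/plain; charset=utf-8" },
    body: message,
  };
}
```

So `buildError(403, "Forbidden")` gives you a plaintext body, and the same call with an `Accept: application/json` request gives you JSON with the header to match.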

I’ve also used .local but .local could imply a local neighborhood. The word itself is based on “location”. Maybe a campus could be .local but the smaller networks would be .internal

Or, maybe they want to not confuse it with link-local or unique local addresses. Though, maybe all .internal networks should be using local (private) addresses?

I’ve been using uBOLite for about a year and I’m pretty happy with it. You don’t have to give the extension access to the content on the page, and all the filtering happens in the browser engine, not in JavaScript.

I just recently started working with ImGui. Rewrite compiled game engines to add support for HDR into games that never supported it? Sure, easy. I can mod most games in an hour if not minutes.

Make the UI respond like any modern flexible-width UI in the past 15 years? It’s still taking me days. All of the ImGui documentation is hidden behind closed GitHub issues. Like, the expected user experience is to bash your head against something for hours, then submit your very specific issue and wait for the author to tell you what to do if you’re lucky, or link to another issue that vaguely resembles your issue.

I know some projects, WhatWG for one, follow the convention of, if something is unclear in the documentation, the issue does not get closed until that documentation gets updated so there’s no longer any ambiguity or lack of clarity.

If you’re talking browsers it’s poor. But HDR on displays is very much figured out and has none of the randomness that you get with SDR from user-varied gamma, colorspace, and brightness. (That doesn’t stop manufacturers from still borking things with Vivid Mode though).

You can pack HDR in JPG/PNG/WebP or anything that supports an ICC profile and Chrome will display it. The actual formats that support HDR directly are PNG (with cICP), AVIF, and JPEG XL.

Your best bet is to use avifenc and convert your HDR file. But note that servers may take your image and break it when rescaling.

Best single source for this info is probably: https://gregbenzphotography.com/hdr/