You lost me at shitting on legacy code. My brother in Tux, we don’t rewrite code willy-nilly in the FOSS world either, and for good reason: new code always means new bugs. A shit ton of the underlying code in your Linux OS was written one or more decades ago.

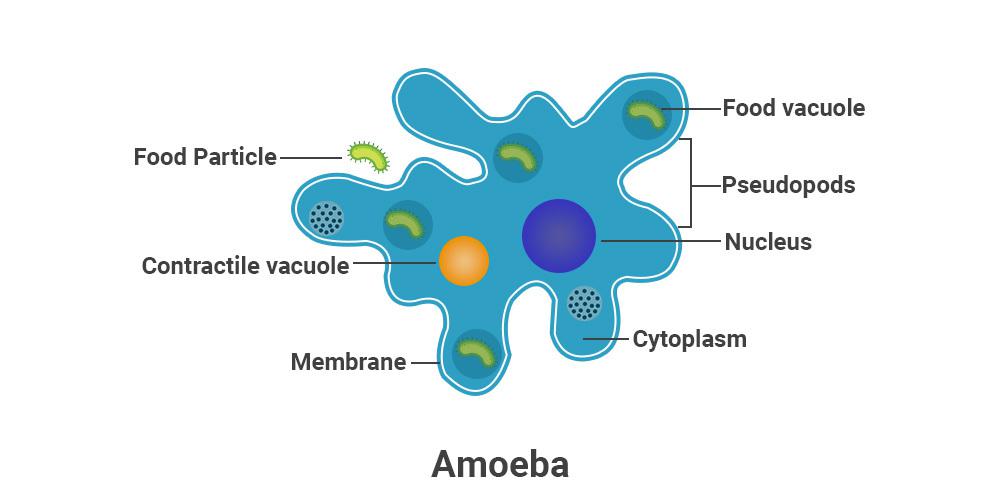

Avid Amoeba


68·21 hours ago

> This is because employees in South Korea can “only” work a maximum of 52 hours per week, including twelve hours of overtime. As a result, employees often have to leave work and go home even when important tasks have not yet been completed. For this reason, key employees of the Exynos team are reported to have worked unpaid overtime more and more frequently over the past few years, with the extra hours going unrecorded.

Why is SK’s birth rate in the shitter?

4·1 day ago

Given everything we’ve seen over the past little while, including non-profits getting taken over by their VC-funded subsidiaries, that difference you see is almost certainly a matter of being at a different point in their respective profit timelines.

3·2 days ago

The author explains in the thread and has links to further info.

Alright. Thanks!

So just wait and don’t apply this, correct?

Unfused. Probes/cables began rapidly heating up. I violently shook the battery to break the probes off it.

Probe tips instantly welded on a nanophosphate A123 battery.

3·3 days ago

It’s a trap.

- A message from Canada

2·6 days ago

You tap search on the home screen, you get the Google app.

111·6 days ago

It’s happening via the Google app.

2·7 days ago

I find the intermediary classification a bit unconvincing and perhaps unintentionally misleading. It sounds like a nice framework through which to look at the world, it describes that particular domain well enough, and it allows for drawing useful conclusions. Unfortunately, solving the problems it highlights would produce only marginal gains, because I think intermediaries as described are just a special case of something more general.

Firms of any kind act as intermediaries in the exchange of the products of people’s labor. The effects are all the same: these intermediaries make the exchange easier in return for keeping some of the products of labor from one end or the other, and usually both. It seems to me that the power of platform intermediaries is just a special case of the power of firms over labor, which really reduces to the power of capital over labor.

If we somehow solve the platform intermediary problem, we leave the general problem unsolved. And if we don’t think in terms of the general problem, we can’t even solve the special one, because the tools needed are controlled by capital. That is, the lawmakers who could change the law are paid by the powerful intermediaries (firms), not by the people on either end of them. If we hope to ever solve any of this, I think we have to look at the world through the general lens and focus on ways to reduce the amount of capital firms accumulate from people’s labor. Fortunately, there are well-known solutions for that, and they’re actionable for most people.

6·10 days ago

- camera sensors (larger and more expensive)

- screen (very high brightness)

- processor/SoC (faster, has 7 years of driver support)

- open source support (can build your own AOSP ROM or use Graphene, etc)

I see. Makes sense.

115·10 days ago

This is the right way to optimize performance. Write everything in a decent higher-level language to achieve good maintainability. Then profile for hotspots, separate them into well-defined modules, and optimize the shit out of them, even if it takes inline assembly. The ugly stays in its own box, and you don’t spend time optimizing stuff that doesn’t need optimization.
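A minimal Python sketch of that workflow, assuming a toy workload: readable code first, the standard `cProfile`/`pstats` modules to find where the time goes, then an optimized replacement with the same contract kept in its own small box. The prime-counting functions and `profile_hotspots` are hypothetical stand-ins; in a real project the optimized box might be a C extension or hand-tuned assembly rather than just a better algorithm.

```python
import cProfile
import io
import math
import pstats


# --- Readable high-level code (the bulk of the program) ---

def _is_prime(n: int) -> bool:
    """Trial division: clear and correct, but slow for large inputs."""
    if n < 2:
        return False
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return False
    return True


def count_primes_naive(limit: int) -> int:
    """Count primes below `limit` the straightforward way."""
    return sum(1 for n in range(limit) if _is_prime(n))


# --- The "ugly box": an isolated, optimized hotspot ---

def count_primes_fast(limit: int) -> int:
    """Sieve of Eratosthenes over a bytearray; same contract as the naive version."""
    if limit < 3:
        return 0
    sieve = bytearray([1]) * limit
    sieve[0] = sieve[1] = 0
    for p in range(2, math.isqrt(limit - 1) + 1):
        if sieve[p]:
            # Strike out multiples of p, starting at p*p, in one slice assignment.
            sieve[p * p::p] = bytes(len(range(p * p, limit, p)))
    return sum(sieve)


# --- Profiling helper: find the hotspot before optimizing anything ---

def profile_hotspots(func, *args, top: int = 10) -> str:
    """Run func(*args) under cProfile; return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()
    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.strip_dirs().sort_stats("cumulative").print_stats(top)
    return out.getvalue()


if __name__ == "__main__":
    # Step 1: profile the readable version to see where the time goes.
    print(profile_hotspots(count_primes_naive, 10_000))
    # Step 2: the optimized box must give the same answers as the code it replaces.
    assert count_primes_naive(10_000) == count_primes_fast(10_000)
```

Callers never see the difference: swapping `count_primes_fast` in for `count_primes_naive` keeps the interface, and only the inside of the box gets uglier.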

101·15 days ago

While not entirely wrong, I’d take anything out of the mouths of free-market fundamentalists like the ones at Mises with a grain of salt.

3·16 days ago

Oh, this is very interesting!

Every time I hear a junior developer say we should rewrite something they’ve made 0.1 effort to understand, I thank the JS world for not giving a generation or two of developers a well-thought-out application development framework.