Stupid article needs a before-and-after comparison.

Instead it has way too many ads.

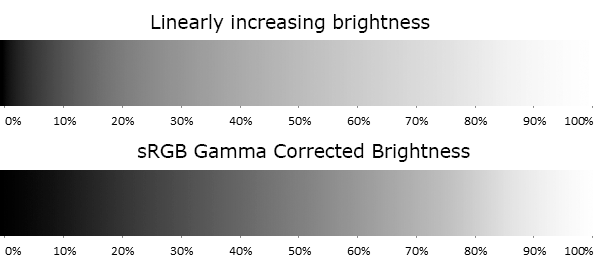

“It’s a bit technical,” begins Birdwell, “but the simple version is that graphics cards at the time always stored RGB textures and even displayed everything as non-linear intensities, meaning that an 8-bit RGB value of 128 encodes a pixel that’s about 22% as bright as a value of 255, but the graphics hardware was doing lighting calculations as though everything was linear.

“The net result was that lighting always looked off. If you were trying to shade something that was curved, the dimming due to the surface angle aiming away from the light source would get darker way too quickly. Just like the example above, something that was supposed to end up looking 50% as bright as full intensity ended up looking only 22% as bright on the display. It looked very unnatural; instead of a nice curve, everything was shaded way too extreme, rounded shapes looked oddly exaggerated, and there wasn’t any way to get things to work in the general case.”
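The "128 is only about 22% as bright as 255" figure falls straight out of the display's transfer curve. The quote doesn't say which curve the hardware of the time assumed, so this minimal sketch uses the standard sRGB curve as an assumption (a plain 2.2 power law gives almost the same number). The function names are just for illustration.

```python
def srgb8_to_linear(value_8bit: int) -> float:
    """Decode an 8-bit sRGB channel value to linear light in [0, 1]."""
    c = value_8bit / 255.0
    # sRGB transfer function: a short linear toe, then a 2.4 power curve
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb8(linear: float) -> int:
    """Encode linear light in [0, 1] back to an 8-bit sRGB value."""
    if linear <= 0.0031308:
        c = linear * 12.92
    else:
        c = 1.055 * linear ** (1 / 2.4) - 0.055
    return round(c * 255)


if __name__ == "__main__":
    for v in (64, 128, 192, 255):
        print(f"{v:3d} -> {srgb8_to_linear(v):.1%} of full brightness")
    # Going the other way: half of full brightness needs a stored value near 188, not 128
    print(linear_to_srgb8(0.5))
```

So a texture value that "looks like" the midpoint numerically is physically much darker, which is exactly why doing lighting math directly on those stored values goes wrong.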


This should have been easy enough to illustrate.
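A rough numerical before/after for the shading problem Birdwell describes, assuming a simple power-law display with gamma 2.2 and plain Lambertian (cosine) diffuse lighting on a white surface. "Naive" multiplies the gamma-encoded value by the lighting term, as the old hardware effectively did; "linear" decodes first, lights in linear space, then re-encodes. All names here are hypothetical.

```python
import math

GAMMA = 2.2  # assumption: simple power-law display, not the exact hardware curve


def displayed_brightness(encoded: float) -> float:
    """Light a gamma display actually emits for an encoded value in [0, 1]."""
    return encoded ** GAMMA


def lambert(angle_deg: float) -> float:
    """Diffuse falloff: cosine of the angle between surface normal and light."""
    return max(0.0, math.cos(math.radians(angle_deg)))


def naive_shade(texel: float, angle_deg: float) -> float:
    # "Before": scale the gamma-encoded texel directly by the lighting term
    return displayed_brightness(texel * lambert(angle_deg))


def linear_shade(texel: float, angle_deg: float) -> float:
    # "After": decode to linear light, apply lighting, re-encode for the display
    linear = displayed_brightness(texel) * lambert(angle_deg)
    encoded = linear ** (1 / GAMMA)
    return displayed_brightness(encoded)


if __name__ == "__main__":
    white = 1.0
    print("angle  intended  naive")
    for angle in (0, 30, 45, 60, 75, 90):
        print(f"{angle:5d}  {linear_shade(white, angle):8.1%}  "
              f"{naive_shade(white, angle):6.1%}")
```

At 60 degrees the cosine is 0.5, so the surface should appear half as bright; the naive path shows it at roughly 22%, matching the figure in the quote, and every angle in between falls off too fast, which is the "oddly exaggerated" look on rounded shapes.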

Edit: Here is a greyscale illustration of a similar phenomenon:

From https://www.odelama.com/photo/Developing-a-RAW-Photo-by-hand/

Of course, in reality it gets a bit more complex, since we also perceive different colors as having different brightness:

From https://www.vis4.net/blog/avoid-equidistant-hsv-colors/
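One common way to put a number on this is relative luminance, which weights the linear red, green and blue channels by how strongly the eye responds to each. This sketch uses the Rec. 709 / sRGB weights; note that luminance is only a rough proxy for perceived brightness, since perception of lightness is itself non-linear in luminance.

```python
def srgb_to_linear(c: float) -> float:
    """Decode an sRGB channel in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(r: float, g: float, b: float) -> float:
    """Relative luminance Y of an sRGB color with channels in [0, 1]."""
    # Rec. 709 / sRGB weights: the eye is far more sensitive to green than to blue
    return (0.2126 * srgb_to_linear(r)
            + 0.7152 * srgb_to_linear(g)
            + 0.0722 * srgb_to_linear(b))


if __name__ == "__main__":
    colors = {"red": (1, 0, 0), "green": (0, 1, 0),
              "blue": (0, 0, 1), "yellow": (1, 1, 0)}
    for name, rgb in colors.items():
        print(f"{name:6s} Y = {relative_luminance(*rgb):.3f}")
```

Fully saturated green, red and blue all have a channel value of 1.0, yet their luminances differ by roughly a factor of ten between green and blue.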

This is amazing! I wish there were something akin to Lemmy Gold! Lemmy give it to ya!

EDIT: I’ve posted this comment to bestoflemmy.

Lemmy Gold you say?

I’m pretty sure you just won comment of the year! This is great and very helpful.

The latter is largely addressed by perceptually uniform color spaces, like OKLAB.
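For the curious, converting sRGB to OKLAB (Oklab, published by Björn Ottosson in 2020) is only a few lines. The matrix coefficients below are transcribed from Ottosson's published reference code; double-check them against the original before relying on this sketch. The demo mirrors the vis4 post: hues spaced evenly in HSV come out with very different Oklab lightness `L`.

```python
import colorsys
import math


def srgb_to_linear(c: float) -> float:
    """Decode an sRGB channel in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_srgb_to_oklab(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert linear sRGB to Oklab (L, a, b)."""
    # Linear sRGB -> approximate cone responses (LMS)
    l = 0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b
    m = 0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b
    s = 0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b
    # Cube-root non-linearity (sign-preserving, for out-of-gamut inputs)
    l_, m_, s_ = (math.copysign(abs(x) ** (1 / 3), x) for x in (l, m, s))
    # Non-linear LMS -> lightness L and opponent axes a, b
    return (
        0.2104542553 * l_ + 0.7936177850 * m_ - 0.0040720468 * s_,
        1.9779984951 * l_ - 2.4285922050 * m_ + 0.4505937099 * s_,
        0.0259040371 * l_ + 0.7827717662 * m_ - 0.8086757660 * s_,
    )


if __name__ == "__main__":
    # Six hues evenly spaced in HSV, all at full saturation and value
    for i in range(6):
        hue = i / 6
        r, g, b = (srgb_to_linear(c) for c in colorsys.hsv_to_rgb(hue, 1.0, 1.0))
        lightness, _, _ = linear_srgb_to_oklab(r, g, b)
        print(f"hue {hue * 360:5.0f} deg  L = {lightness:.3f}")
```

Because `L` tracks perceived lightness much more closely than HSV's "value", picking palette colors with equal steps in Oklab (or its polar form, OkLCh) avoids the uneven brightness jumps shown in the linked post.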